In OIM 11g, the Design Console is still a required tool for system configuration, custom development and customization. Unlike OIM 9.x, however, Design Console 11g no longer has its own installer. It is installed and configured along with the OIM server installation.

One of the common questions around Design Console 11g is: if there is no installer anymore, how do I get it working on my desktop/laptop without installing the whole Identity and Access Management pack?

This is an easy task and this post describes the steps for getting it done:

1. If you don't have JDK 1.6 on your laptop, you will have to install it.

2. Run the OIM configuration script again. The script is available at $IAM_HOME/bin, where IAM_HOME is the folder where the ‘Identity and Access Management Pack’ was installed. You have to run the ‘config.sh’ in the $IAM_HOME/bin folder and NOT the one at ‘$IAM_HOME/common/bin/config.sh’.

3. In the configuration wizard, select ONLY the ‘Design Console’ checkbox.

4. In the next screen, enter the OIM server host name and port. The wizard will configure the Design Console files for you.
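Steps 1 and 2 are easy to get wrong (an older JDK, or the config.sh under common/bin). The following is a minimal sanity-check sketch, not part of the OIM tooling: it assumes you capture the text that java -version prints yourself, and the function names are hypothetical helpers.

```python
import os
import re


def java_version(version_output: str):
    """Return (major, minor) parsed from `java -version` output, or None.

    For example, 'java version "1.6.0_24"' yields (1, 6).
    """
    match = re.search(r'version "(\d+)\.(\d+)', version_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def is_jdk_1_6(version_output: str) -> bool:
    """True when the reported JDK is a 1.6 release."""
    return java_version(version_output) == (1, 6)


def design_console_config_script(iam_home: str) -> str:
    """Path of the config.sh to run: $IAM_HOME/bin, NOT $IAM_HOME/common/bin."""
    return os.path.join(iam_home, "bin", "config.sh")
```

Note that java -version writes its banner to stderr, so capture that stream when feeding is_jdk_1_6.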

Friday, April 29, 2011

Tuesday, April 26, 2011

OBIEE 10g SSO Integration with OAM 11g

In this post I share the steps needed to integrate OBIEE 10g with OAM 11g Single Sign-On, with one caveat: the OBIEE Analytics application is deployed in WebLogic Server.

OBIEE 10g has two installation modes: basic and advanced. For SSO integration, you must pick adavanced mode. And Oracle Application Server version 10.1.3.1.0 or later is required.

OBIEE 10g deployment guide states that it can be implemented with any SSO solution that uses cookies, http header variables or JavaEE container server variables. That’s true indeed, and most of the configuration is actually performed on the OBIEE side.

OBIEE 10g implements SSO through the concept of impersonation. It retrieves the end user identity through one of the mechanisms mentioned above and uses an impersonator user to establish a session to the OBIEE server on behalf of the end user.

This post by no means intends to discuss OBIEE architecture or go into the details of OAM 11g. As a matter of fact, the latter is extensively discussed in this blog by my colleagues in the Oracle Access Manager Academy series. Reading strongly recommended. Fantastic material.

And what you’re about to follow has been implemented in a Windows XP box for demonstration purposes.

The exact product versions used were:

Oracle Business Intelligence Enterprise Edition 10.1.3.4.1

Oracle Identity and Access Management 11.1.1.3.0

Oracle Access Manager WebGates 11.1.1.3.0

Oracle Identity Management 11.1.1.3.0

Oracle WebTier Utilities 11.1.1.2.0

Oracle Weblogic Server 10.3.4

Oracle Containers For Java (OC4J) 10.1.3.5.0

Fasten your seat belts! Here we go.

When you install OBIEE 10g, you get a set of standalone component processes, some admin UIs and a front-end web application running on top of OC4J.

For the purposes of this post, we’re interested in the BI Server, BI Presentation Services and the BI Presentation Services Plug-in components. The BI Server is a standalone process that maintains the BI data model and connects to data stores. BI Presentation Services is another standalone process that present information worked by BI Server to clients via ODBC. BI Presentation Services Plug-in allows web clients to interact with BI Presentation Services. In JavaEE application servers, it is a servlet component delivered via the analytics.war web application.

Once OBIEE is installed, find the analytics.war file under $BI_HOME/web folder.

Shut down OC4J in case it's running. We don't need it.

Simply use Weblogic console to deploy the analytics.war application. There really is nothing special here. Click click click and you should get the analytics application up and running in Weblogic.

OHS front-ends Weblogic server. A mod weblogic routing rule will forward requests to the analytics application running in Weblogic.

This step can also be accomplished via Enterprise Manager.

Open mod_wl_ohs.conf located under your OHS instance home config folder and type in the following:

Make sure to replace the values in between <!-- -->

The OHS instance home config folder is typically located at $ORACLE_HOME/instances/<instance-name>/config/OHS/ohs1

Restart OHS.

Checkpoint 1: at this point we should be able to submit requests to OHS and have them directed to Weblogic.

Nothing special here. Just follow OAM 11g install guide. It is a good idea to create one Weblogic domain along with one managed server for the OAM server.

The WebGate checks whether the executing user is authenticated before letting it access the analytics application.

Simply follow OAM Administration Guide Instructions.

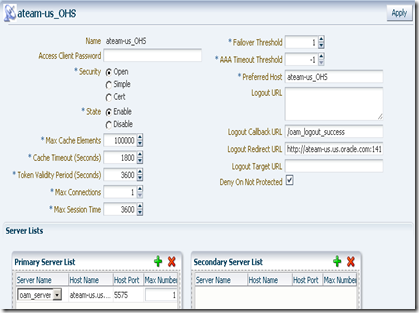

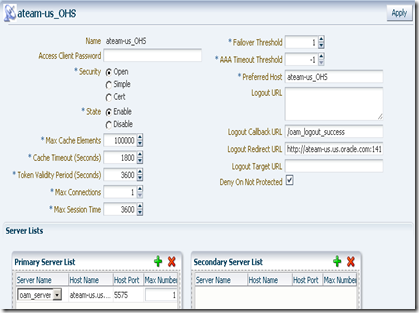

Here’s my WebGate definition:

On registration, by default, you get an application domain and Authentication and Authorization policies automatically configured for the patterns / and /…/*. You don’t need those policies. Remove them and add the /analytics/…/* as a protected resource to the set of Authentication and Authorization policies.

And make sure you copy the generated ObAccessConfig.xml and cwallet.sso from the OAM’s $DOMAIN_HOME/output/<agent-name> to the WebGate’s instance config folder, which is typically located at OHS’ $ORACLE_HOME/instances/<instance-name>/config/OHS/ohs1/webgate/config.

<agent-name> is the name you gave to your WebGate when you registered it in the OAM Console.

Restart both OAM access server and the WebGate.

Checkpoint 2: At this point we should be able to have the WebGate intercepting calls to /analytics URL running in WebLogic and asking for credentials. Upon entering them, the user would be re-challenged by BI login screen.

Connect to the BI Administrator tool and select Manage –> Security.

Select User, right click on the panel’s right side, select New User… and type in the user name. For this exercise, I am calling it Impersonator. Make sure it is a member of the Administrators group.

Navigate to BI’s home web/bin folder and type:

You are prompted for some information. Make sure the Credential Alias is impersonation (literally). Username and password should obviously match those you just provided in the previous step. Encrypt the password and give it a passphrase.

instanceconfig.xml is also located at <OracleBIData>/web/config.

In my case, <OracleBIData> is C:\OracleBIData.

In order to allow BI Presentation Services connecting to BI Server using the Impersonator user, add the following snippet as a child of <ServerInstance> element:

Make sure to enter the passphrase you chose previously.

In order to allow BI Presentation Services consuming the end user identity authenticated by OAM, add the following as a child of <ServerInstance> element as well:

Here we’re instructing BI Presentation Services to use the OAM_REMOTE_USER http header value as the “impersonatee” user. OMA_REMOTE_USER is always put in the HTTP header by OAM upon successful authentication. BI will simply trust that. Dangerous? Oh yes.

Don NOT go to production without implementing a trusting mechanism between Weblogic and OHS. Weblogic should only accept requests from OHS. And the solutions to the rescue are 2-way SSL or some firewalling protecting Weblogic. Don’t let anyone sending requests directly to Weblogic!

Restart BI Server and BI Presentation Services processes.

Checkpoint 3: At this point SSO should work for /analytics. After getting challenged by OAM on accessing /analytics/saw.dll?Dashboard, you should be let in without any further authentication challenge by BI.

Notice that we still have two user repositories. OAM is looking at the Weblogic embedded LDAP server while BI is looking at its internal repository. That assumes the user is defined in both identity stores.

OBIEE 10g has the option of importing users and groups to its internal repository from external systems. That’s certainly an option, but it involves synchronization, which I am not a great fan of. Import and synchronization are available in the BI Administration tool.

If you seek a single identity store, keep reading.

The application policy domain created when we registered our WebGate uses Weblogic embedded LDAP server as the identity store by default.

We need to change it, by pointing it to an external LDAP server. OID being the choice here.

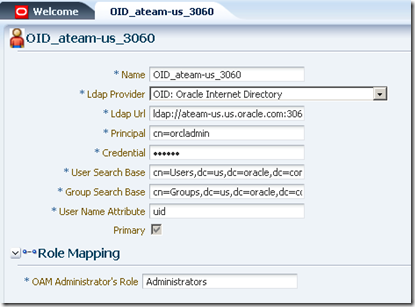

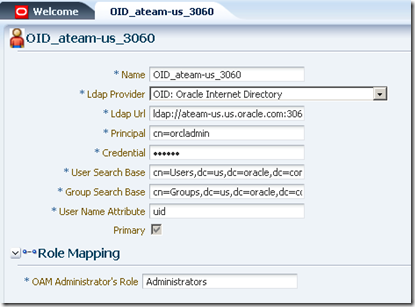

This is done in OAM console. On the System Configuration tab, expand the Data Sources node and select User Identity Stores. Click the New button on the tool bar. Here’s my definition:

Then associate this identity store to the authentication scheme that is associated with the authentication policy protecting the /analytics/…/* pattern. This is done under Authentication Modules node on the System Configuration tab:

LDAP is the authentication module defined for the authentication scheme protecting our /analytics/…/* pattern.

Restart OAM server.

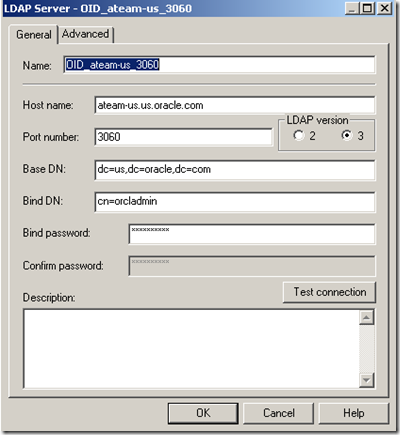

Using BI Administration tool, define the LDAP server. Go to Manage –> Security –> New… –> LDAP Server

Click the Advanced tab and inform uid as the User name attribute type. uid is the attribute that univocally identifies the user in OID.

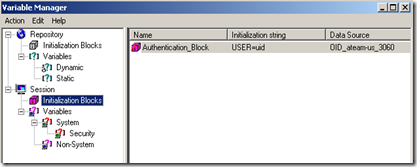

Using the BI Administration tool, go to Manage –> Variables. On the left side panel, under Session, select System. Right click on the right side and pick New USER…

Initialization blocks are the means by which external repository data is communicated to BI server.

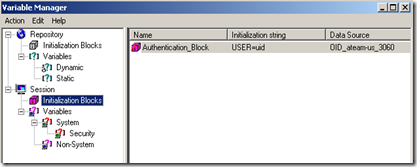

Again, using BI Administration tool, go to Manage –> Variables. Click Session. Right click on the right side and pick New Initialization Block… Give it a name, like Authentication Block.

Under Data Source, click Edit Data Source… button, pick LDAP as the type, click Browse button and pick the LDAP server you’ve defined previously.

Under Variable Target, pick the USER variable you’ve created. Inform uid as the LDAP Variable value.

You should end up with something like this:

Restart BI Server.

Checkpoint 4: at this point, you should be able to login with a user defined in OID and access the BI analytics application in SSO mode, but you’ll notice that the privileges within BI analytics look wrong.

Unfortunately, OBIEE 10g does not retrieve group memberships directly from LDAP. But it is possible to implement it indirectly, by creating a virtual table in the Oracle database populated with LDAP user/group information (that can be done with DBMS_LDAP package).

Another option would be writing a SQL query directly against OID tables, but that’s too invasive and could break at any time due to changes in the OID schema, which is private.

Once you populate a table using DBMS_LDAP package, you can query it via a second Initialization Block and retrieve the group names for a given user, populating the GROUP session variable. This block should refer the Authentication initialization block we’ve defined earlier as a predecessor so that the USER variable is properly initialized with the authenticated user.

I am not done with this part yet. As time permits, I will come back with the virtual table definition as well as the initialization block.

But I guess there's already plenty to do in case you want to try this out. Let me know about your experiences.

Refer to product documentation.

OBIEE 10g Documentation Library (Deployment Guide has most of the information presented here).

OAM 11g Administration Guide

OBIEE 10g has two installation modes: basic and advanced. For SSO integration, you must pick advanced mode. Oracle Application Server version 10.1.3.1.0 or later is also required.

The OBIEE 10g Deployment Guide states that SSO can be implemented with any SSO solution that uses cookies, HTTP header variables or Java EE container server variables. That’s indeed true, and most of the configuration is actually performed on the OBIEE side.

OBIEE 10g implements SSO through the concept of impersonation. It retrieves the end user identity through one of the mechanisms mentioned above and uses an impersonator user to establish a session to the OBIEE server on behalf of the end user.

This post by no means intends to discuss OBIEE architecture or go into the details of OAM 11g. As a matter of fact, the latter is extensively discussed in this blog by my colleagues in the Oracle Access Manager Academy series. Reading strongly recommended. Fantastic material.

What you’re about to follow was implemented on a Windows XP box for demonstration purposes.

The exact product versions used were:

- Oracle Business Intelligence Enterprise Edition 10.1.3.4.1
- Oracle Identity and Access Management 11.1.1.3.0
- Oracle Access Manager WebGates 11.1.1.3.0
- Oracle Identity Management 11.1.1.3.0
- Oracle WebTier Utilities 11.1.1.2.0
- Oracle WebLogic Server 10.3.4
- Oracle Containers for Java (OC4J) 10.1.3.5.0

Fasten your seat belts! Here we go.

1 - Install OBIEE 10g

When you install OBIEE 10g, you get a set of standalone component processes, some admin UIs and a front-end web application running on top of OC4J.

For the purposes of this post, we’re interested in the BI Server, BI Presentation Services and BI Presentation Services Plug-in components. The BI Server is a standalone process that maintains the BI data model and connects to data stores. BI Presentation Services is another standalone process that presents the information processed by BI Server (which it accesses via ODBC) to clients. The BI Presentation Services Plug-in allows web clients to interact with BI Presentation Services. In Java EE application servers, it is a servlet component delivered via the analytics.war web application.

Once OBIEE is installed, find the analytics.war file under $BI_HOME/web folder.

Shut down OC4J in case it's running. We don't need it.

2 - Deploy the analytics.war application in WebLogic

Simply use the WebLogic console to deploy the analytics.war application. There really is nothing special here. Click click click and you should get the analytics application up and running in WebLogic.

3 - Install Oracle HTTP Server (OHS)

OHS sits in front of WebLogic Server. A mod_wl_ohs routing rule forwards requests to the analytics application running in WebLogic.

4 - Create a mod_wl_ohs routing rule in OHS for the /analytics URL

This step can also be accomplished via Enterprise Manager.

Open mod_wl_ohs.conf located under your OHS instance home config folder and type in the following:

```apache
<IfModule weblogic_module>
   WebLogicHost <!--weblogic-server-running-analytics-application-->
   WebLogicPort <!--weblogic-port-->
   Debug ON
   WLLogFile /tmp/weblogic.log
</IfModule>

<Location /analytics>
   SetHandler weblogic-handler
</Location>
```

Make sure to replace the values in between <!-- -->

The OHS instance home config folder is typically located at $ORACLE_HOME/instances/<instance-name>/config/OHS/ohs1
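If you maintain several OHS instances, the block above can be generated rather than hand-edited. This is a minimal sketch: render_mod_wl_block is a hypothetical helper, the host and port are whatever WebLogic server runs your analytics application, and you still append the result to mod_wl_ohs.conf yourself.

```python
def render_mod_wl_block(weblogic_host: str, weblogic_port: int,
                        location: str = "/analytics",
                        log_file: str = "/tmp/weblogic.log") -> str:
    """Build the mod_wl_ohs.conf routing block with the placeholders filled in."""
    if not 0 < weblogic_port < 65536:
        raise ValueError(f"invalid port: {weblogic_port}")
    return "\n".join([
        "<IfModule weblogic_module>",
        f"   WebLogicHost {weblogic_host}",
        f"   WebLogicPort {weblogic_port}",
        "   Debug ON",
        f"   WLLogFile {log_file}",
        "</IfModule>",
        "",
        # Everything under this URL prefix is handed to the WebLogic plug-in.
        f"<Location {location}>",
        "   SetHandler weblogic-handler",
        "</Location>",
        "",
    ])
```

For example, print(render_mod_wl_block("bihost.example.com", 7001)) prints a block ready to paste; the host name here is a placeholder.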

Restart OHS.

Checkpoint 1: at this point we should be able to submit requests to OHS and have them directed to Weblogic.

5 - Install OAM 11g

Nothing special here. Just follow the OAM 11g installation guide. It is a good idea to create a WebLogic domain with one managed server for the OAM server.

6 - Install OAM 11g WebGate in OHS

The WebGate checks whether the user is authenticated before letting them access the analytics application.

7 – Register the WebGate in OAM Console

Simply follow OAM Administration Guide Instructions.

Here’s my WebGate definition:

On registration, by default, you get an application domain and Authentication and Authorization policies automatically configured for the patterns / and /…/*. You don’t need those policies. Remove them and add the /analytics/…/* as a protected resource to the set of Authentication and Authorization policies.
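To reason about which URLs the /analytics/…/* resource covers, here is a rough approximation of the pattern as a regular expression. This is a sketch under stated assumptions, not OAM's matching engine: it assumes "..." (typeset as … in this post) matches zero or more intermediate directories and "*" matches any characters within a single path segment. Check the OAM Administration Guide for the exact rules, including how query strings are handled.

```python
import re


def oam_pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Approximate an OAM resource pattern as a regex (illustration only).

    Assumed semantics: '/.../' stands for zero or more intermediate
    directories and '*' for any run of characters within one segment.
    """
    pattern = pattern.replace("\u2026", "...")  # typographic ellipsis -> three dots
    parts = []
    i = 0
    while i < len(pattern):
        if pattern.startswith("/.../", i):
            parts.append("/(?:[^/]+/)*")  # zero or more directory levels
            i += len("/.../")
        elif pattern[i] == "*":
            parts.append("[^/]*")  # any characters inside one segment
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("^" + "".join(parts) + "$")
```

Under these assumptions the bare /analytics URL (no trailing slash) is not covered, which is worth testing against your real policy.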

And make sure you copy the generated ObAccessConfig.xml and cwallet.sso from the OAM’s $DOMAIN_HOME/output/<agent-name> to the WebGate’s instance config folder, which is typically located at OHS’ $ORACLE_HOME/instances/<instance-name>/config/OHS/ohs1/webgate/config.

<agent-name> is the name you gave to your WebGate when you registered it in the OAM Console.
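The copy step can be scripted so a missing artifact is caught before OHS is restarted. A minimal sketch: copy_webgate_artifacts is a hypothetical helper, and the three paths are your own $DOMAIN_HOME, agent name and WebGate config folder as described above.

```python
import shutil
from pathlib import Path

# Files generated by OAM when the WebGate agent is registered.
WEBGATE_ARTIFACTS = ("ObAccessConfig.xml", "cwallet.sso")


def copy_webgate_artifacts(domain_home: str, agent_name: str,
                           webgate_config_dir: str) -> list:
    """Copy the agent artifacts from $DOMAIN_HOME/output/<agent-name> into the
    WebGate instance config folder and return the destination paths."""
    source_dir = Path(domain_home) / "output" / agent_name
    missing = [str(source_dir / name) for name in WEBGATE_ARTIFACTS
               if not (source_dir / name).is_file()]
    if missing:
        # Fail before touching the target folder if registration output is incomplete.
        raise FileNotFoundError(f"missing {missing}; was the agent registered?")
    target_dir = Path(webgate_config_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    return [Path(shutil.copy2(source_dir / name, target_dir / name))
            for name in WEBGATE_ARTIFACTS]
```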

Restart both the OAM access server and the WebGate.

Checkpoint 2: At this point, the WebGate should intercept calls to the /analytics URL running in WebLogic and ask for credentials. After entering them, the user is challenged again by the BI login screen.

8 - Create Impersonator user for the BI Server

Connect to the BI Administration tool and select Manage –> Security.

Select User, right click on the panel’s right side, select New User… and type in the user name. For this exercise, I am calling it Impersonator. Make sure it is a member of the Administrators group.

9 - Add Impersonator user to BI Presentation Services credential store (credentialstore.xml)

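Navigate to the web/bin folder under the BI home and type:

```
cryptotools credstore -add -infile <OracleBIData>/web/config/credentialstore.xml
```

You are prompted for some information. Make sure the Credential Alias is impersonation (literally). The username and password must match those you provided in the previous step. Encrypt the password and give it a passphrase.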

10 - Configure instanceconfig.xml

instanceconfig.xml is also located at <OracleBIData>/web/config.

In my case, <OracleBIData> is C:\OracleBIData.

To allow BI Presentation Services to connect to BI Server as the Impersonator user, add the following snippet as a child of the <ServerInstance> element:

```xml
<CredentialStore>
   <CredentialStorage type="file" path="C:\OracleBIData\web\config\credentialstore.xml" passphrase="welcome1"/>
</CredentialStore>
```

Make sure to enter the passphrase you chose previously.
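Editing instanceconfig.xml by hand works fine; if you prefer to script it, here is a minimal Python sketch. It assumes the 10g layout with a <ServerInstance> element and no XML namespace, reuses the example passphrase shown above, and add_server_instance_child is a hypothetical helper. ElementTree drops comments and the XML declaration when rewriting, so keep a backup of the original file.

```python
import xml.etree.ElementTree as ET

CREDENTIAL_STORE_SNIPPET = (
    '<CredentialStore>'
    '<CredentialStorage type="file" '
    'path="C:\\OracleBIData\\web\\config\\credentialstore.xml" passphrase="welcome1"/>'
    '</CredentialStore>'
)


def add_server_instance_child(config_xml: str, snippet: str) -> str:
    """Return config_xml with `snippet` added as a child of <ServerInstance>,
    replacing any existing child element with the same tag."""
    root = ET.fromstring(config_xml)
    server_instance = root if root.tag == "ServerInstance" else root.find(".//ServerInstance")
    if server_instance is None:
        raise ValueError("no <ServerInstance> element found")
    new_child = ET.fromstring(snippet)
    for old in server_instance.findall(new_child.tag):
        server_instance.remove(old)  # keep the edit idempotent
    server_instance.append(new_child)
    return ET.tostring(root, encoding="unicode")
```

The same helper works for any other <ServerInstance> child.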

To allow BI Presentation Services to consume the end-user identity authenticated by OAM, also add the following as a child of the <ServerInstance> element:

```xml
<Auth>
   <SSO enabled="true">
      <ParamList>
         <Param name="IMPERSONATE" source="httpHeader" nameInSource="OAM_REMOTE_USER"/>
      </ParamList>
   </SSO>
</Auth>
```

Here we’re instructing BI Presentation Services to use the OAM_REMOTE_USER HTTP header value as the “impersonatee” user. OAM_REMOTE_USER is always put in the HTTP header by OAM upon successful authentication. BI will simply trust that. Dangerous? Oh yes.

Do NOT go to production without implementing a trust mechanism between WebLogic and OHS. WebLogic should only accept requests from OHS, and the solutions to the rescue are two-way SSL or some firewalling protecting WebLogic. Don’t let anyone send requests directly to WebLogic!
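To make the risk concrete: any client that can reach WebLogic directly can send its own OAM_REMOTE_USER header and claim any identity. The sketch below illustrates the principle that two-way SSL or firewall rules enforce, namely that the header is honored only when the request comes from the OHS host. It is an illustration with a hypothetical function and example addresses, not something that plugs into OBIEE or WebLogic; the real protection belongs at the network or SSL layer.

```python
import ipaddress


def impersonated_user(remote_addr: str, headers: dict, trusted_proxies: list):
    """Return the OAM_REMOTE_USER value only when the request came from a
    trusted proxy (the OHS host); otherwise ignore the header and return None."""
    address = ipaddress.ip_address(remote_addr)
    if not any(address in ipaddress.ip_network(net) for net in trusted_proxies):
        return None  # a direct caller could have forged the header
    # HTTP header names are case-insensitive.
    for name, value in headers.items():
        if name.lower() == "oam_remote_user":
            return value
    return None
```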

Restart BI Server and BI Presentation Services processes.

Checkpoint 3: At this point SSO should work for /analytics. After being challenged by OAM when accessing /analytics/saw.dll?Dashboard, you should be let in without any further authentication challenge from BI.

Notice that we still have two user repositories. OAM is looking at the Weblogic embedded LDAP server while BI is looking at its internal repository. That assumes the user is defined in both identity stores.

OBIEE 10g has the option of importing users and groups to its internal repository from external systems. That’s certainly an option, but it involves synchronization, which I am not a great fan of. Import and synchronization are available in the BI Administration tool.

If you seek a single identity store, keep reading.

11 - Define a new OID identity store in OAM

This step assumes OID has been previously installed. In this exercise, the OID version is the one packaged in Oracle Identity Management 11.1.1.3.0.

The application policy domain created when we registered our WebGate uses the WebLogic embedded LDAP server as the identity store by default.

We need to change it, by pointing it to an external LDAP server. OID being the choice here.

This is done in OAM console. On the System Configuration tab, expand the Data Sources node and select User Identity Stores. Click the New button on the tool bar. Here’s my definition:

Then associate this identity store to the authentication scheme that is associated with the authentication policy protecting the /analytics/…/* pattern. This is done under Authentication Modules node on the System Configuration tab:

LDAP is the authentication module defined for the authentication scheme protecting our /analytics/…/* pattern.

Restart OAM server.

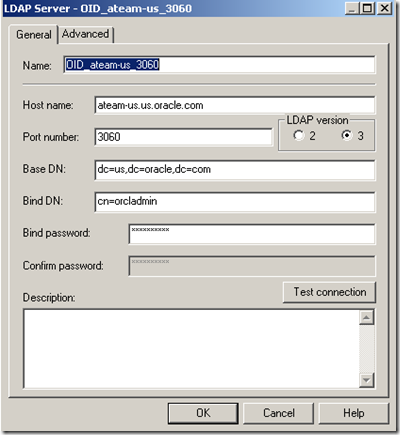

12 - Create an LDAP server in BI Server (the same OID identity store above)

Using the BI Administration tool, define the LDAP server. Go to Manage –> Security –> New… –> LDAP Server

Click the Advanced tab and inform uid as the User name attribute type. uid is the attribute that univocally identifies the user in OID.
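Setting uid as the user name attribute means that, conceptually, the login name is looked up with an equality filter on uid. The sketch below shows how such a filter is built, including the RFC 4515 escaping that any value taken from a request (such as the OAM_REMOTE_USER header) should get. It illustrates the idea only; BI Server builds its own LDAP queries internally, and the helper names are hypothetical.

```python
def escape_ldap_filter_value(value: str) -> str:
    """Escape a value for use inside an LDAP search filter (RFC 4515)."""
    replacements = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\0": r"\00"}
    # Character-by-character mapping avoids escaping an escape twice.
    return "".join(replacements.get(ch, ch) for ch in value)


def user_search_filter(username: str, attribute: str = "uid") -> str:
    """Equality filter that finds the entry whose `attribute` equals the login name."""
    return f"({attribute}={escape_ldap_filter_value(username)})"
```

Without the escaping, a crafted name such as x)(uid=* would change the meaning of the filter instead of matching nothing.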

13 - Create a USER session variable in BI Server.

* Defining a USER session variable tells BI Server to authenticate users against an external repository. In case of conflicting user names, however, users defined in the BI repository take precedence.

Using the BI Administration tool, go to Manage –> Variables. On the left-side panel, under Session, select System. Right-click on the right side and pick New USER…

14 - Create an LDAP Initialization Block for authenticating users in OID.

Initialization blocks are the means by which external repository data is communicated to BI server.

Again, using BI Administration tool, go to Manage –> Variables. Click Session. Right click on the right side and pick New Initialization Block… Give it a name, like Authentication Block.

Under Data Source, click Edit Data Source… button, pick LDAP as the type, click Browse button and pick the LDAP server you’ve defined previously.

Under Variable Target, pick the USER variable you’ve created. Enter uid as the LDAP Variable value.

You should end up with something like this:

Restart BI Server.

Checkpoint 4: at this point, you should be able to log in with a user defined in OID and access the BI analytics application in SSO mode, but you’ll notice that the privileges within BI analytics look wrong.

15 – Implementing authorizations for BI using groups defined in an external LDAP server.

Unfortunately, OBIEE 10g does not retrieve group memberships directly from LDAP. But it is possible to implement this indirectly, by creating a virtual table in the Oracle database populated with LDAP user/group information (which can be done with the DBMS_LDAP package).

Another option would be writing a SQL query directly against OID tables, but that’s too invasive and could break at any time due to changes in the OID schema, which is private.

Once you populate a table using the DBMS_LDAP package, you can query it via a second initialization block and retrieve the group names for a given user, populating the GROUP session variable. This block should reference the Authentication initialization block we defined earlier as a predecessor, so that the USER variable is properly initialized with the authenticated user.
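Pending the real table and block definitions, here is only a hypothetical illustration of the data flow, not the solution. The table name ldap_user_groups and its columns are assumptions, SQLite stands in for the Oracle database, and the ? placeholder stands in for the authenticated user that, in OBIEE, would come from the USER variable set by the predecessor block. The query returns one (variable name, value) row per group, in the spirit of OBIEE's row-wise variable initialization; check the Deployment Guide for the exact initialization block syntax.

```python
import sqlite3

# Hypothetical shape of the table populated from OID via DBMS_LDAP.
SCHEMA = "CREATE TABLE ldap_user_groups (uid TEXT NOT NULL, group_name TEXT NOT NULL)"

# One row per (variable name, value) pair for the authenticated user.
GROUP_QUERY = (
    "SELECT 'GROUP', group_name FROM ldap_user_groups "
    "WHERE uid = ? ORDER BY group_name"
)


def session_groups(conn: sqlite3.Connection, user: str) -> list:
    """Return the values the GROUP session variable would receive for `user`."""
    return [value for variable, value in conn.execute(GROUP_QUERY, (user,))
            if variable == "GROUP"]
```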

I am not done with this part yet. As time permits, I will come back with the virtual table definition as well as the initialization block.

But I guess there's already plenty to do in case you want to try this out. Let me know about your experiences.

For more details…

Refer to product documentation.

OBIEE 10g Documentation Library (Deployment Guide has most of the information presented here).

OAM 11g Administration Guide

Monday, April 25, 2011

Performance Tuning Tips for OIM

Escalations in OIM are typically related to performance issues; however, performance problems can be prevented by following some common recommended practices on how to configure OIM’s components and connectors.


This article discusses several issues that can cause performance problems, along with recommendations to avoid them. They are grouped into the following categories:

- Memory Related

- Connector Related

- Customization Related

MEMORY RELATED ISSUES

Heap Size for the VM running OIM’s process: This is a very common question, and there is no definitive answer for all cases, because it depends heavily on how OIM is going to be used. Some companies rely heavily on automatic provisioning and very rarely use UI-driven processes. In these cases, it is important to ensure that the VM has enough memory available to process big loads of data at any given time. Most of the time we have seen a maximum JVM heap of 2-4 GB, which works well for heavily automated provisioning. For companies that allow their employees to request their own resources, the heap size should be chosen according to the following criteria:

- Concurrency: The higher this gets the more memory will be required. Unfortunately it is very hard to determine an actual number but 4 GB seems reasonable for high concurrency implementations.

- Complexity of the Data Schema for the resources: If the provisioned resources have many data dependencies on third-party systems, or the Process Forms are very intricate or have many associated Child Tables, more memory is required to represent requests for these resources. The recommendation is to always simplify the design of your process forms and resource object schemas as much as possible.

- Complexity of the Approval processes, if any: The need to open connections to third-party systems, for instance to search for approvers or to determine the destination of an approval request, affects the memory requirements as well, especially in high-concurrency systems. In 11g this is not a factor, because these requirements are implemented in SOA Composites handled by a SOA Server.

- Size of Metadata and Configuration Data (Lookups): Be very mindful of how much information is represented as lookups or configuration data. Some customers decided to store corporate groups as Lookup Fields, which causes hundreds of thousands of entries to be pulled by those lookup fields. This is a potential performance problem, since OIM’s implementation of Lookup Fields executes lookup queries to pre-populate the list of values displayed to users for selection. There is no database-driven pagination; pagination actually happens in the UI. This significantly affects the amount of memory required, so customers should be very careful about how they represent data associated with the provisioned resources and how this data is retrieved and presented to users in the UI.
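The lookup problem is easiest to see side by side. The sketch below is generic and is not OIM code: SQLite and the hypothetical lookup_values table stand in for OIM's lookup storage, and each function reports how many rows it pulled into application memory to show one page. LIMIT/OFFSET is SQLite syntax; Oracle 11g would typically use ROWNUM instead.

```python
import sqlite3


def page_in_ui(conn: sqlite3.Connection, page: int, page_size: int):
    """UI-side pagination: fetch every lookup value, then slice in memory."""
    rows = conn.execute("SELECT code FROM lookup_values ORDER BY code").fetchall()
    start = page * page_size
    return [code for (code,) in rows[start:start + page_size]], len(rows)


def page_in_database(conn: sqlite3.Connection, page: int, page_size: int):
    """Database-driven pagination: only the requested page leaves the database."""
    rows = conn.execute(
        "SELECT code FROM lookup_values ORDER BY code LIMIT ? OFFSET ?",
        (page_size, page * page_size),
    ).fetchall()
    return [code for (code,) in rows], len(rows)
```

Both return the same page, but with 100,000 lookup entries the UI-side version materializes every row for each page view, which is exactly the memory pressure described above.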

CONNECTOR RELATED

Provisioning is a very complex problem to solve in companies. OIM inherits some of that complexity in order to simplify, to some degree, the mechanisms that tackle it. Connectors are right in the middle of the storm when it comes to addressing this complexity, because they are the cornerstone of account provisioning to target systems, which are as numerous and diverse as the needs of all the lines of business in which OIM operates. Customer environments are almost always a mixture of commercial software systems and in-house applications, which more often than not have very specific requirements. Some things to keep in mind when working with connectors and customizing them are the following:

- Understand the life cycle of adapters and connectors: This is very important, because customers and integrators often forget about it and implement customizations in a way that does not suit the dynamics of OIM’s component life cycles. Implementing resource pools inside adapters is not a good practice, and it is futile, because adapters are instantiated per request and are totally stateless. If adapters need to use centralized resources, consider leveraging application server resources such as JDBC data sources. This keeps adapters separate from the management of such shared resources, while still letting them use those resources across multiple adapter instantiations.

- Reconciliation implemented by connectors: Reconciliation uses JMS resources to process messages. To enhance performance, customers usually configure distributed JMS destinations when the hosting application server supports them. Some servers have a feature called Server Affinity which, when enabled, prevents messages from being load balanced across the multiple physical servers that host members of the distributed JMS destination. In WebLogic this can be turned off, allowing JMS producers to distribute messages to physical destinations on servers other than the one the producer runs on. This truly allows big clusters to be leveraged for large reconciliations. Combined with increasing the number of MDB threads that process reconciliation event messages, this can enhance the performance of large-scale systems. For details, check the following link: http://download.oracle.com/docs/cd/E12840_01/wls/docs103/jms_admin/advance_config.html#wp1076348
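As a rough mental model of why disabling Server Affinity helps, the toy simulation below compares where messages from a producer on one server land. It is not WebLogic's algorithm: real behavior depends on connection factory settings, member availability and the configured load-balancing policy (round-robin is assumed here), and the member names are hypothetical.

```python
from collections import Counter
from itertools import cycle


def route_messages(messages: int, local_member: str, members: list,
                   server_affinity: bool) -> Counter:
    """Toy model of where a producer's reconciliation messages end up.

    With affinity, every message stays on the producer's local member;
    without it, messages are spread round-robin over all members.
    """
    if server_affinity:
        return Counter({local_member: messages})
    targets = cycle(members)
    return Counter(next(targets) for _ in range(messages))
```

With three members and nine messages, affinity keeps all nine on the local server, while round-robin gives each member three, so the MDBs on every server share the load.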

OIM provides multiple mechanisms to integrate customers’ business logic into their OIM implementation. Some of these mechanisms come in the form of Adapters. Adapters can be used to pre-populate process data forms and request fields, and to perform operations on the user’s data before, during or after the user is inserted into the database (Entity Adapters). OIM also provides a rich set of APIs so customers can create their own UIs to access OIM’s functionality.

Traditionally, OIM 10g customers customized the Struts Action implementation classes, whose source code was actually exposed. Even though this was very powerful, many customers ended up with poorly performing UIs. In OIM 11g, customizing the Out of the Box Web Console beyond cosmetic changes, such as adding logos or changing labels, is no longer possible. If customers require special handling of data that the Out of the Box Web Console in OIM 11g does not support, then building a custom UI on top of OIM's APIs is the only approach. Some recommendations that apply to customizations are the following:

- Pre-load OIM's APIs and reuse the references as much as possible: Avoid looking up OIM's API interfaces every time an adapter needs them. This is a perfect opportunity to implement a wrapper that follows the recommendation above. Make sure you synchronize access to those references to make your code more robust and reliable.

- Know the size of your data: It is very important to know how much data will be handled by either a customized version of the Out of the Box Web Console or your own UI. It can have a large impact on performance and will also affect memory requirements. See Memory Related Issues for more about this.
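A minimal sketch of the pre-load-and-reuse idea from the first bullet above. It assumes the actual OIM lookup call is passed in as a function; the OimApiCache class and the injected lookup are hypothetical, and no real OIM lookup API is shown. ConcurrentHashMap.computeIfAbsent provides the synchronized access the bullet asks for: each interface is looked up at most once, even when several threads request it at the same time.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

// Hypothetical wrapper that looks up each API interface once and reuses the reference.
final class OimApiCache {
    // Injected lookup; in a real deployment it would wrap your existing OIM service lookup.
    private final Function<Class<?>, Object> lookup;
    // Thread-safe cache: computeIfAbsent runs the lookup at most once per interface.
    private final Map<Class<?>, Object> cache = new ConcurrentHashMap<>();

    OimApiCache(Function<Class<?>, Object> lookup) {
        this.lookup = lookup;
    }

    // Returns the cached reference for the interface, looking it up on first use.
    <T> T get(Class<T> apiInterface) {
        return apiInterface.cast(cache.computeIfAbsent(apiInterface, lookup));
    }
}
```

Because adapters are instantiated per request, the cache instance itself would need to live somewhere longer-lived, such as a static field in a shared utility class.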

Labels:

OIM 11g,

Performance

Sunday, April 24, 2011

SSL offloading and WebLogic server

A couple of weeks ago I wrote about using Apache to simulate an SSL load balancer and showed this diagram:

One of the important things to note is that by default in this architecture WebLogic and any J2EE applications won't know that the user is using SSL to access the server, because any calls to HttpServletRequest.isSecure() will return false! There is a solution, though: two configuration directives in the WebLogic web server plug-ins (mod_wl in Apache and OHS) allow you to tweak the behavior. Those directives are WLProxySSL and WLProxySSLPassThrough.


Friday, April 22, 2011

Authenticated Sessions and WebLogic (including clusters)

When you write a J2EE app or use any of the technologies built on top of J2EE, some aspects of what happens underneath you are one step removed from magic. That's great during development, but as you get closer to production you may need to pull back the curtain a bit so you can plan properly.

Let's say you have a very simple Servlet that does two things: tells you who you are and counts the number of times you've loaded the servlet. Something like this:


package project1;

import java.io.*;
import javax.servlet.*;
import javax.servlet.http.*;

public class TestServlet extends HttpServlet {
    public void doGet(HttpServletRequest request, HttpServletResponse response)
            throws ServletException, IOException {
        response.setContentType("text/plain");
        PrintWriter out = response.getWriter();
        // Name of the authenticated user (null if the request is unauthenticated)
        out.write("Username: " + request.getRemoteUser() + "\n");

        // Get the existing session or create one; it is held in this server's JVM
        HttpSession session = request.getSession(true);
        Integer iCount = (Integer) session.getAttribute("count");
        if (iCount == null) {
            // First request in this session (or the counter was never set)
            iCount = 0;
        }
        iCount++;
        session.setAttribute("count", iCount);
        out.write("Count: " + iCount);
    }
}

And let's say that you protect the app with Basic authentication, like so:

<?xml version = '1.0' encoding = 'ISO-8859-1'?>
<web-app xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd"
         version="2.5" xmlns="http://java.sun.com/xml/ns/javaee">
  <servlet>
    <servlet-name>TestServlet</servlet-name>
    <servlet-class>project1.TestServlet</servlet-class>
    <load-on-startup>0</load-on-startup>
  </servlet>
  <servlet-mapping>
    <servlet-name>TestServlet</servlet-name>
    <url-pattern>/*</url-pattern>
  </servlet-mapping>
  <security-constraint>
    <web-resource-collection>
      <web-resource-name>all</web-resource-name>
      <url-pattern>/*</url-pattern>
    </web-resource-collection>
    <auth-constraint>
      <role-name>allusers</role-name>
    </auth-constraint>
  </security-constraint>
  <login-config>
    <auth-method>BASIC</auth-method>
    <realm-name>Session Test</realm-name>
  </login-config>
  <security-role>
    <role-name>allusers</role-name>
  </security-role>
</web-app>

When you first hit the app via HTTP, the server will see that you haven't authenticated and will respond with a 401, and your browser will pop up a Basic auth box.

The next time you make an HTTP request, this time including your credentials, the server will:

- validate the creds

- see that you don't have a session

- create a session for the user

- squirrel the session away in memory on that server (i.e. in that JVM)

- issue a cookie named JSESSIONID

- and finally, execute the servlet.
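The steps above can be modeled in a few lines of plain Java. This is a toy (ToySessionServer and its in-memory user map are hypothetical and are not WebLogic's implementation), but it shows two points that matter here: Basic credentials are just base64-encoded user:password, and the session exists only in the memory of the JVM that created it.

```java
import java.nio.charset.StandardCharsets;
import java.security.SecureRandom;
import java.util.Base64;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Toy model of the login steps above; not how WebLogic is implemented.
final class ToySessionServer {
    // Hypothetical user store standing in for the security realm.
    private final Map<String, String> users;
    // Sessions live only in this object, i.e. in this one JVM.
    private final Map<String, String> sessions = new ConcurrentHashMap<>();
    private final SecureRandom random = new SecureRandom();

    ToySessionServer(Map<String, String> users) {
        this.users = users;
    }

    // The header value a browser sends: "Basic " + base64("user:password").
    static String basicHeader(String user, String password) {
        String raw = user + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(raw.getBytes(StandardCharsets.UTF_8));
    }

    // Validates the creds and returns a new JSESSIONID value, or null (the server would send a 401).
    String login(String authorizationHeader) {
        if (authorizationHeader == null || !authorizationHeader.startsWith("Basic ")) {
            return null;
        }
        byte[] bytes;
        try {
            bytes = Base64.getDecoder().decode(authorizationHeader.substring("Basic ".length()));
        } catch (IllegalArgumentException malformed) {
            return null;
        }
        String decoded = new String(bytes, StandardCharsets.UTF_8);
        int colon = decoded.indexOf(':');
        if (colon < 0) {
            return null;
        }
        String user = decoded.substring(0, colon);
        String password = decoded.substring(colon + 1);
        if (!password.equals(users.get(user))) {
            return null;
        }
        // Create the session, keep it in this JVM's memory and hand back its id.
        byte[] id = new byte[16];
        random.nextBytes(id);
        String sessionId = Base64.getUrlEncoder().withoutPadding().encodeToString(id);
        sessions.put(sessionId, user);
        return sessionId;
    }

    // Returns the user bound to a JSESSIONID, or null if this JVM has never seen it.
    String userFor(String sessionId) {
        return sessionId == null ? null : sessions.get(sessionId);
    }
}
```

Two ToySessionServer instances behave like two servers without any session replication: a JSESSIONID issued by one means nothing to the other.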

Well, for that you need to deploy a cluster. There's a whole lot of info out there about WebLogic Server clustering, so I'm not going to go into the details of how the AdminServer, managed servers and clusters work. This is a blog about security stuff in the Fusion stack, so you didn't come here to read about managed servers and clusters anyway. The only reason I'm writing about any of this is that some aspects of it affect security in ways you might not have thought of until now.

Thursday, April 21, 2011

Developing Workflows to OIM 11g - the basics

OIM & BPEL Working together?

OIM 11g release brought us the powerful world of Oracle BPEL based workflows: from this release on, Oracle BPEL is the workflow engine to be used by OIM in all sorts of requests and their related approval processes. While this integration makes OIM workflows way more powerful and flexible when compared to OIM 9.x, the development process is quite different. The idea for this article is to provide tips for making the development process more straightforward.

First, let's look at the main development steps for creating a new workflow:

1. Generating a basic workflow: OIM provides a utility that can be used to generate a JDeveloper project containing a basic BPEL workflow process:

‘ant -f new_project.xml’

The ‘new_project.xml’ is located at $OIM_HOME/server/workflows/new-workflow.

You have to provide the application name (which will become the JDeveloper application name), the project name (which will become the JDeveloper project in the application) and the process name (which needs to be unique across applications and will be the BPEL process name).

The command generates a JDeveloper application; copy it to wherever your JDeveloper is installed and start working on your customizations.


Labels:

11g,

BPEL,

deployment,

OIM,

oracle identity manager,

soa,

workflow

Wednesday, April 20, 2011

OAM 11g Logout Part One (of two)

This post is part of a larger series on Oracle Access Manager 11g called Oracle Access Manager Academy. An index to the entire series with links to each of the separate posts is available.

In my last two posts on OAM I discussed OAM 11g login and cookies and OAM 11g session management, and in both I promised to cover the logout process next.

There's an entire chapter dedicated to the subject of logout in the OAM documentation. The following by no means replaces that chapter. Instead, like the rest of the posts in this series, it is intended to give you an easier-to-understand recap of the most important points in that chapter.

To quickly recap what I covered previously: when you log into OAM you get two different kinds of cookies:

- one called OAM_ID for the OAM servers

- one for each Web Server + WebGate you access (OAM_AuthnCookie_hostname:port)

- Simple central logout with default final page

- Simple central logout with custom final page

- WebGate initiated logout

Labels:

oam,

oam 11g academy

Thursday, April 14, 2011

Watch out those code source grants

Code source grants are policies governing the rights of code running in a JVM (Java Virtual Machine). In this article, I talk about 3 common implementation issues when dealing with code source grants in a Weblogic/OPSS (Oracle Platform Security Services) environment.

Code source grants have been one of the protection mechanisms offered by the Java platform since Java SE 1.2, which improved on previous versions by introducing a fine-grained authorization model, as opposed to the all-or-nothing model of Java SE 1.0 and 1.1.

In a nutshell, if you want to allow only code from a given jar file to read and write files in the /tmp folder, you can specify the following in one of the java.policy files:

grant codeBase "file:/MyApp/MyJar.jar" {
    permission java.io.FilePermission "/tmp/*", "read,write";
};

The codeBase grant along with the permission defines what is known as a protection domain.

Then, when you run the JVM with the security manager enabled (-Djava.security.manager), any code attempting to read or write files in the /tmp folder is checked for whether it has been granted that permission.

Fair enough.
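To see how the java.policy grant above is matched, the sketch below evaluates the same FilePermission with FilePermission.implies, which is the comparison a permission check relies on when the security manager is enabled. It does not install a security manager; FileGrantDemo is a hypothetical name, and the paths assume a Unix-style file system.

```java
import java.io.FilePermission;

// Evaluates requested file accesses against the permission from the grant above.
final class FileGrantDemo {
    // Same path pattern and actions as the java.policy grant.
    static final FilePermission GRANTED = new FilePermission("/tmp/*", "read,write");

    // True if the granted permission covers the requested path and actions.
    static boolean allows(String path, String actions) {
        return GRANTED.implies(new FilePermission(path, actions));
    }
}
```

Note that "/tmp/*" covers only the files directly inside /tmp; a grant on "/tmp/-" would cover the whole subtree.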

OPSS does not use the standard java.policy. Instead, its JAAS implementation reads code source grants (as well as principal-based grants) from the configured policy store repository (system-jazn-data.xml by default). Here's a snippet:

 1: <jazn-data>
 2:   <policy-store>
 3:     <applications>
 4:       <application>
 5:         <name>MyApp</name>
 6:         <jazn-policy>
 7:           <grant>
 8:             <grantee>
 9:               <principals>
10:                 <principal>
11:                   <class>oracle.security.jps.internal.core.principals.JpsAuthenticatedRoleImpl</class>
12:                   <name>authenticated-role</name>
13:                 </principal>
14:               </principals>
15:             </grantee>
16:             <permissions>
17:               <permission>
18:                 <class>oracle.adf.share.security.authorization.RegionPermission</class>
19:                 <name>trunk.pagedefs.SearchPageDef</name>
20:                 <actions>view</actions>
21:               </permission>
22:             </permissions>
23:           </grant>
24:         </jazn-policy>
25:       </application>
26:     </applications>
27:   </policy-store>
28:   <jazn-policy>
29:     <grant>
30:       <grantee>
31:         <codesource>
32:           <url>file:${domain.home}/servers/${weblogic.Name}/tmp/_WL_user/MyApp/-</url>
33:         </codesource>
34:       </grantee>
35:       <permissions>
36:         <permission>
37:           <class>oracle.security.jps.service.policystore.PolicyStoreAccessPermission</class>
38:           <name>context=APPLICATION,name=MyApp</name>
39:           <actions>getApplicationPolicy</actions>
40:         </permission>
41:       </permissions>
42:     </grant>
43:   </jazn-policy>
44: </jazn-data>

Lines 28-43 define the code source grant in OPSS syntax. Notice that it closely resembles a plain Java policy grant. This specific grant allows any code deployed under file:${domain.home}/servers/${weblogic.Name}/tmp/_WL_user/MyApp to call getApplicationPolicy in the MyApp application policy context.

This leads us to issue #1.

Code source grants must be defined separately from principal-based grants.

In system-jazn-data.xml, principal-based grants are application-scoped, meaning they apply to a specific application and are defined within the <application> element. Code source grants are referred to as system grants and are defined in the outermost <jazn-policy> element, outside of any <application> element. OPSS is very strict when it comes to policies in LDAP: it won't accept system grants in application scope.

Observe Weblogic server staging mode.

Within a grant definition, the codesource url element refers to the code's physical location in the file system. WebLogic Server deploys bits into different locations depending on its staging mode. In stage mode, application bits are copied from the AdminServer to a specific directory on the managed servers. By default it is ${domain.home}/servers/${weblogic.Name}/tmp/_WL_user/<application-name>/ (or whatever is specified by the staging directory name attribute). In nostage mode, applications are, by default, laid down at ${domain.home}/servers/AdminServer/upload/<application-name>/.

And if you use the embedded WebLogic Server in JDeveloper, applications are read from yet another location: ${domain.home}/../o.j2ee/drs/<application-name>

As a result, code source grants in the policy files must be aligned to the server staging mode.

<application-name> is the actual deployed application name.

It’s a good idea to have something in the build system to target the system grants appropriately to your actual testing and production environments.
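One way to do that, sketched under assumptions: a small build-time helper (CodeSourceUrls is hypothetical) that picks the codesource URL for the target staging mode from the default directories listed above and renders a system grant like the one in the system-jazn-data.xml snippet. It assumes the nostage upload directory sits under ${domain.home} and does not handle a custom staging directory name.

```java
// Hypothetical build-time helper for targeting system grants at a staging mode.
final class CodeSourceUrls {
    enum StagingMode { STAGE, NOSTAGE, JDEV_EMBEDDED }

    // Returns the codesource URL using the default deployment directory for each mode.
    static String codeSourceUrl(StagingMode mode, String applicationName) {
        String dir;
        switch (mode) {
            case STAGE:
                dir = "${domain.home}/servers/${weblogic.Name}/tmp/_WL_user/" + applicationName;
                break;
            case NOSTAGE:
                dir = "${domain.home}/servers/AdminServer/upload/" + applicationName;
                break;
            case JDEV_EMBEDDED:
                dir = "${domain.home}/../o.j2ee/drs/" + applicationName;
                break;
            default:
                throw new IllegalArgumentException("Unknown staging mode: " + mode);
        }
        // A trailing "/-" matches all files below the directory, recursively.
        return "file:" + dir + "/-";
    }

    // Renders a system grant shaped like lines 29-42 of the snippet above.
    static String systemGrant(StagingMode mode, String applicationName) {
        return "<grant>\n"
            + "  <grantee>\n"
            + "    <codesource>\n"
            + "      <url>" + codeSourceUrl(mode, applicationName) + "</url>\n"
            + "    </codesource>\n"
            + "  </grantee>\n"
            + "  <permissions>\n"
            + "    <permission>\n"
            + "      <class>oracle.security.jps.service.policystore.PolicyStoreAccessPermission</class>\n"
            + "      <name>context=APPLICATION,name=" + applicationName + "</name>\n"
            + "      <actions>getApplicationPolicy</actions>\n"
            + "    </permission>\n"
            + "  </permissions>\n"
            + "</grant>\n";
    }
}
```

The ${...} placeholders are emitted literally, as in the snippet above, so the policy store can expand them at runtime.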

Know about AccessController.doPrivileged when invoking protected APIs

The JVM security model rightly takes a conservative approach when evaluating code source grants: it does not allow a piece of code to “do stuff” on behalf of another piece of code unless the application developer explicitly says so. Imagine if you had the rights to delete the contents of a folder and did that upon anyone's request. Bad things would happen. So the JVM enforces the concept of a trusted path: whenever a secured operation is called, a permission check is made against all protection domains in the call chain of the executing thread, which means every protection domain in that call chain must be granted the permission in the policy store. Obviously, getting the set of grants right can get very tricky, especially if you're writing code that is invoked as part of some framework that is itself invoked by another framework... You can spend a considerable amount of work figuring out the right code source URLs.

However, the JVM gives us the option of calling the secured API inside an AccessController.doPrivileged block. This signals the JVM not to check permissions further up the call chain. In other words, the protection domain containing that block is entitled to execute the operation on behalf of anyone calling it, without the need to grant anyone else. Here's some sample code:

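import java.security.*;
import oracle.security.jps.JpsContext;
import oracle.security.jps.JpsContextFactory;
import oracle.security.jps.service.policystore.ApplicationPolicy;
import oracle.security.jps.service.policystore.PolicyStore;
import oracle.security.jps.service.policystore.PolicyStoreException;

JpsContextFactory f = JpsContextFactory.getContextFactory();
JpsContext c = f.getContext();
final PolicyStore ps = c.getServiceInstance(PolicyStore.class);
final String appId = "MyApp";
ApplicationPolicy ap;
try {
    // Only this code's protection domain needs the PolicyStoreAccessPermission grant
    ap = AccessController.doPrivileged(new PrivilegedExceptionAction<ApplicationPolicy>() {
        public ApplicationPolicy run() throws PolicyStoreException {
            return ps.getApplicationPolicy(appId);
        }
    });
} catch (PrivilegedActionException e) {
    // Unwrap the checked exception thrown by run()
    throw (PolicyStoreException) e.getException();
}
// Continue with the ap object for policy operations.

The doPrivileged block makes the getApplicationPolicy method executable while requiring the grant only for the corresponding protection domain (and nobody else) in the policy store, exactly as in the system-jazn-data.xml shown above.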

AccessController.doPrivileged expects either a PrivilegedAction or a PrivilegedExceptionAction type. Both define the run method, where the protected API call is to be made.
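The difference between the two action types can be seen without OPSS at all. In this sketch (PrivilegedCalls is a hypothetical class), PrivilegedAction suits calls that throw no checked exceptions, while PrivilegedExceptionAction lets run() throw a checked exception, which doPrivileged wraps in a PrivilegedActionException for the caller to unwrap. Without a security manager installed, doPrivileged simply runs the action, so this shows the API shape rather than the permission behavior; note also that AccessController has been deprecated for removal since Java 17.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.security.AccessController;
import java.security.PrivilegedAction;
import java.security.PrivilegedActionException;
import java.security.PrivilegedExceptionAction;

// Hypothetical examples of both action types accepted by doPrivileged.
final class PrivilegedCalls {
    // PrivilegedAction: run() cannot throw checked exceptions.
    static String readProperty(final String key) {
        return AccessController.doPrivileged(new PrivilegedAction<String>() {
            public String run() {
                return System.getProperty(key);
            }
        });
    }

    // PrivilegedExceptionAction: checked exceptions from run() arrive wrapped.
    static long fileSize(final String path) throws IOException {
        try {
            return AccessController.doPrivileged(new PrivilegedExceptionAction<Long>() {
                public Long run() throws IOException {
                    return Files.size(Paths.get(path));
                }
            });
        } catch (PrivilegedActionException e) {
            // getException() returns the checked exception thrown by run()
            throw (IOException) e.getException();
        }
    }
}
```

Runtime exceptions thrown inside run() are not wrapped; they propagate to the caller unchanged.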

It is important that such an approach gets combined with other protection mechanisms, otherwise other code deployed in the container could leverage the privileged code in some “uncool” manner. And if you make that code available to external clients, make sure those interfaces are properly secured (well, this also applies to any sensitive aspect that you expose, regardless of AccessController.doPrivileged usage).

Labels:

AccessController,

codesource,

grant,

opss,

weblogic

Tuesday, April 12, 2011

Extending the OID 11g schema via ldapmodify

I recently said the following to someone in an IMversation:

ldapsearch and ldapmodify are about 10 times better than a stupid GUI because you can script everything

it's like the difference between knowing SQL and having to use TOAD or Access (*shudder*) to add rows to a db table

I think if I had a personal motto it would be something like "if I can't script it then I'm not interested." (well that or "oh look, shiny!") I recently had to extend the LDAP schema of an OID 11g directory and I didn't have access to the ODSM GUI (for reasons that aren't important). So I had to extend the schema via ldapsearch/ldapmodify (i.e. the hard way) and thought I'd write down my steps to save you the trouble of figuring it out for yourself.

Monday, April 11, 2011

OAM 11g session management

This post is part of a larger series on Oracle Access Manager 11g called Oracle Access Manager Academy. An index to the entire series with links to each of the separate posts is available.

Yesterday's post on OAM 11g SSO and Cookies discussed how login works in OAM and the HTTP Cookies you will see. There is one little detail that I left out so as to not make the discussion too complicated. Specifically I left out the OAM server side session tracking.

In OAM 10g and other products in the WAM space there is no actual tracking of a user's session. Usually in those products, when a user logs in they are issued an encrypted cookie that tracks the login time, authentication level, the idle and maximum session times and a few other bits of information. If a user had such a cookie, they were logged in; if they didn't, they weren't. This sort of architecture was designed at a time when building massively scalable session tracking mechanisms wasn't really possible; in other words, there was no way to build a million-concurrent-user SSO scheme deployed worldwide if you had to keep track of every active user session in a database or LDAP directory.

Times have changed.

OAM 11g takes advantage of a cool technology called Oracle Coherence. I'd tell you what Coherence does, but they do a pretty good job right there:

Coherence provides replicated and distributed (partitioned) data management and caching services on top of a reliable, highly scalable peer-to-peer clustering protocol. Coherence has no single points of failure; it automatically and transparently fails over and redistributes its clustered data management services when a server becomes inoperative or is disconnected from the network. When a new server is added, or when a failed server is restarted, it automatically joins the cluster and Coherence fails back services to it, transparently redistributing the cluster load. Coherence includes network-level fault tolerance features and transparent soft re-start capability to enable servers to self-heal.

By plugging Coherence into the OAM architecture, Oracle added the ability for the OAM Server to track all active user sessions without needing to go back to a massive central store (for example, a database) and without needing to worry about building a replication strategy. Coherence hides all of that complexity and solves what is still a massive problem for some of our competitors.

In the sequence diagram in my previous post I only drew the lines for HTTP traffic. I left out a bunch of stuff, like the OAP communication from WebGate to OAM Server and the fact that the OAM Server will check that the session is active and legal before granting access. The more accurate, but still simplified, OAM architecture diagram looks more like this:

Each time the OAM WebGate talks to the OAM Server to ask "is the user authorized to see this resource?", the OAM Server checks the Coherence cache and will say "NO!" if the session has been deleted. So if you want to terminate a user's session, you can!

Open the OAM Console, go to the System Configuration tab, expand System Utilities and open the Session Management page. Then just type in the username you want to search for and hit Enter or the Go button. OAM will show you all of the active sessions for that user.

You can select one or more sessions and terminate them by hitting the little X button. OAM will give you one more chance to review what you're about to do. If you hit YES, the user is kicked out and will have to log in anew the next time they access anything.

Now that we've covered login, cookies and session management in OAM 11g, I promise the NEXT post I do will cover Logout!
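The server-side check described in this post can be modeled with a concurrent map standing in for the Coherence cache. This is a toy sketch under that assumption (ToySessionStore is hypothetical and uses none of the Coherence or OAM APIs): every authorization check consults the shared store, so in this model removing an entry, as the console's X button does, ends the session on the very next check.

```java
import java.util.List;
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;

// Toy model of server-side session tracking; not the OAM or Coherence API.
final class ToySessionStore {
    // Session id -> user name; stands in for the cache shared by all OAM Servers.
    private final Map<String, String> sessions = new ConcurrentHashMap<>();

    // Records a new session at login and returns its id.
    String create(String user) {
        String id = UUID.randomUUID().toString();
        sessions.put(id, user);
        return id;
    }

    // Consulted on every "is the user authorized?" request.
    boolean isActive(String sessionId) {
        return sessionId != null && sessions.containsKey(sessionId);
    }

    // Like searching by username on the Session Management page.
    List<String> sessionsFor(String user) {
        return sessions.entrySet().stream()
                .filter(e -> e.getValue().equals(user))
                .map(Map.Entry::getKey)
                .collect(Collectors.toList());
    }

    // Like hitting the X button: the next check for this session fails.
    void terminate(String sessionId) {
        sessions.remove(sessionId);
    }
}
```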

Labels:

oam,

oam 11g academy
